Parallel and Distributed Deep Learning

Why do we need parallel and distributed deep learning? Distributed training is a model training paradigm that involves spreading the training workload across multiple workers. One can think of several methods to parallelize and/or distribute this computation; for a deep neural network, the two main ones are data parallelism and model parallelism.

This series of articles is a brief theoretical introduction to how parallel/distributed ML systems are built. It begins with a primer of relevant parallelism and communication theory, including parallel reductions for parameter updates. We present trends in DNN architectures and the resulting implications for parallelization strategies. We then review and model the different types of concurrency in DNNs, from the single operator, through parallelism in …, to parallel and distributed methods and parallel models in a distributed environment.
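Parallel reductions for parameter updates can be illustrated with a ring all-reduce. This is a common way to sum gradients across workers in data-parallel training. The following is a minimal sketch in plain Python. It assumes all workers are simulated as lists inside one process, so no real communication library is used, and the function name `ring_allreduce` is hypothetical. Each of the n workers splits its gradient into n chunks. Partial sums then pass around the ring for n - 1 steps (reduce-scatter). Finally, the finished chunks circulate for another n - 1 steps (all-gather).

```python
from typing import List


def ring_allreduce(grads: List[List[float]]) -> List[List[float]]:
    """Simulate a ring all-reduce (elementwise sum) among n workers in one process.

    grads[r] is worker r's local gradient; its length must be divisible by n.
    Returns one list per worker, each holding the same summed vector.
    """
    n = len(grads)
    size = len(grads[0])
    if size % n != 0:
        raise ValueError("gradient length must be divisible by the number of workers")
    c = size // n
    # Split every worker's buffer into n chunks (copies, so inputs are untouched).
    buf = [[list(g[i * c:(i + 1) * c]) for i in range(n)] for g in grads]

    # Reduce-scatter: at step s, worker r sends chunk (r - s) % n to its right
    # neighbour, which adds it into its own copy of that chunk.
    for s in range(n - 1):
        msgs = [(r, (r - s) % n, list(buf[r][(r - s) % n])) for r in range(n)]
        for r, idx, data in msgs:
            dst = (r + 1) % n
            buf[dst][idx] = [a + b for a, b in zip(buf[dst][idx], data)]
    # Worker r now holds the fully reduced chunk (r + 1) % n.

    # All-gather: at step s, worker r forwards chunk (r + 1 - s) % n to its right
    # neighbour, which overwrites its stale copy.
    for s in range(n - 1):
        msgs = [(r, (r + 1 - s) % n, list(buf[r][(r + 1 - s) % n])) for r in range(n)]
        for r, idx, data in msgs:
            buf[(r + 1) % n][idx] = data

    # Flatten the chunks back into one vector per worker.
    return [[x for chunk in b for x in chunk] for b in buf]


if __name__ == "__main__":
    local = [[1.0, 2.0, 3.0], [10.0, 20.0, 30.0], [100.0, 200.0, 300.0]]
    for rank, result in enumerate(ring_allreduce(local)):
        print(rank, result)
```

Each worker sends 2(n - 1) messages of size/n elements. Its traffic therefore stays below twice the gradient size however many workers join. Dividing the result by n turns the sum into the gradient average used for the parameter update.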

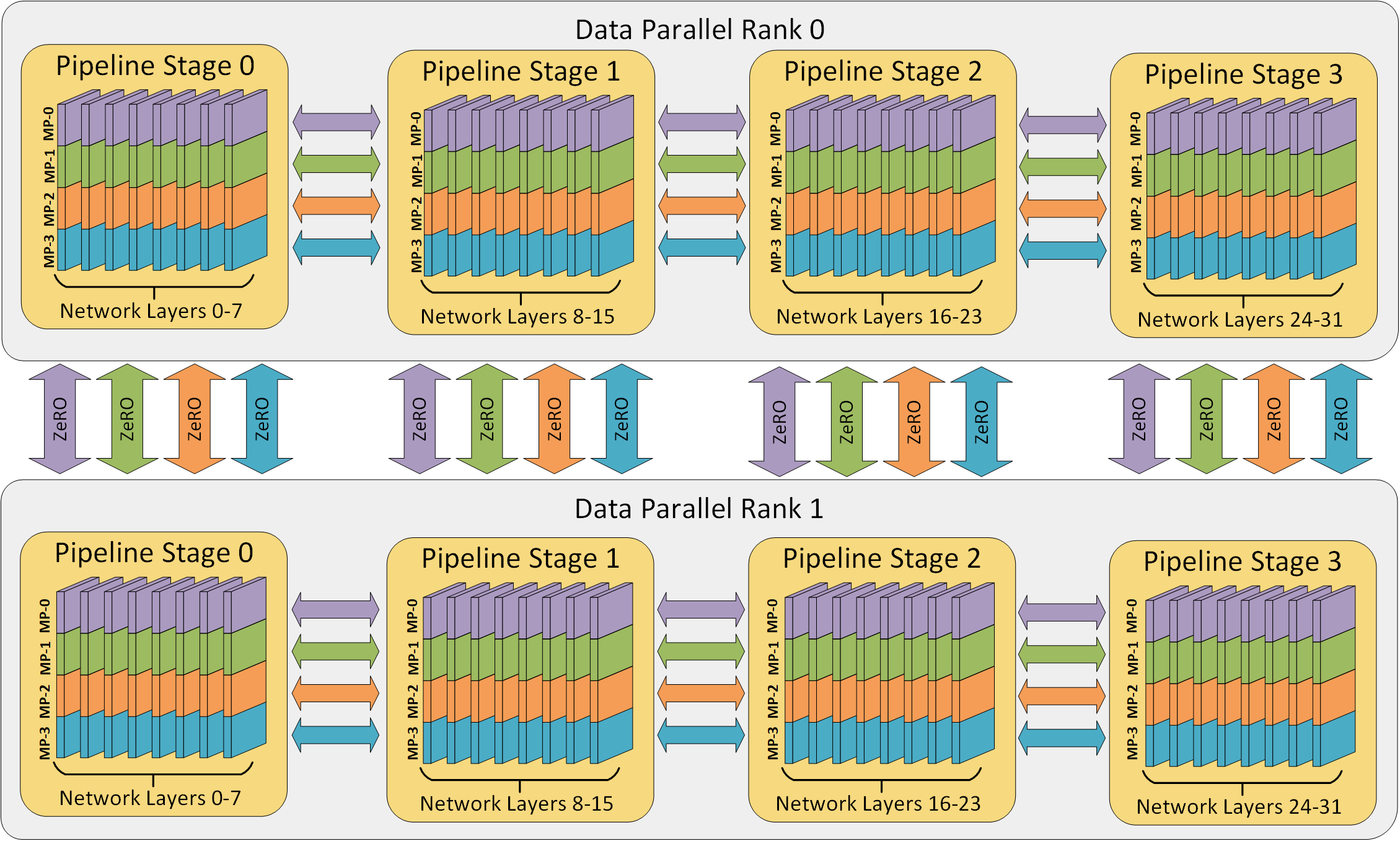

From www.deepspeed.ai

Pipeline Parallelism (DeepSpeed)
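Pipeline parallelism places consecutive groups of layers (stages) on different devices. Each mini-batch is split into micro-batches so that the stages can work at the same time. The following is a minimal sketch of a simple fill-and-drain (GPipe-style) forward schedule in plain Python. It illustrates the idea only; it is not DeepSpeed's actual schedule or API, and the helper names are hypothetical. The sketch also counts the idle "bubble" at the start and end of the pipeline.

```python
from typing import List, Optional


def forward_schedule(num_stages: int, num_micro: int) -> List[List[Optional[int]]]:
    """Fill-and-drain forward schedule for a pipeline.

    Returns grid[t][s]: the micro-batch that stage s processes at tick t,
    or None when that stage is idle (a pipeline "bubble").
    """
    ticks = num_stages + num_micro - 1
    grid = []
    for t in range(ticks):
        row: List[Optional[int]] = []
        for s in range(num_stages):
            m = t - s  # micro-batch m reaches stage s at tick m + s
            row.append(m if 0 <= m < num_micro else None)
        grid.append(row)
    return grid


def bubble_fraction(num_stages: int, num_micro: int) -> float:
    """Fraction of stage-ticks spent idle; equals (S - 1) / (M + S - 1)."""
    grid = forward_schedule(num_stages, num_micro)
    idle = sum(cell is None for row in grid for cell in row)
    return idle / (len(grid) * num_stages)


if __name__ == "__main__":
    for t, row in enumerate(forward_schedule(num_stages=3, num_micro=4)):
        print(f"tick {t}: " + "  ".join("-" if m is None else f"m{m}" for m in row))
    print(bubble_fraction(4, 8))
```

With S stages and M micro-batches, each stage is idle for S - 1 of the M + S - 1 ticks. Using more micro-batches per mini-batch therefore shrinks the bubble.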

From theaisummer.com

Distributed Deep Learning Training: Model and Data Parallelism in …
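The difference between model and data parallelism can be seen on a single linear layer y = xW. Data parallelism replicates W and splits the batch (the rows of x) across workers. Model parallelism replicates x and splits W, here by output columns, which is only one of several possible splits. Both reassemble the same y. This is a minimal single-process sketch: the helper names are hypothetical. In a real system each slice would live on a different device, and the final concatenation would be a communication step.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain a @ b for nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def bounds(length: int, n: int) -> List[int]:
    """Split points for n contiguous, nearly equal parts."""
    return [length * i // n for i in range(n + 1)]


def data_parallel(x: Matrix, w: Matrix, n: int) -> Matrix:
    # Every worker holds the full weight matrix and a slice of the batch rows.
    b = bounds(len(x), n)
    outputs = [matmul(x[b[r]:b[r + 1]], w) for r in range(n)]
    return [row for out in outputs for row in out]  # stack the row blocks


def model_parallel(x: Matrix, w: Matrix, n: int) -> Matrix:
    # Every worker holds the full batch and a slice of the weight columns.
    b = bounds(len(w[0]), n)
    outputs = [matmul(x, [row[b[r]:b[r + 1]] for row in w]) for r in range(n)]
    # Concatenate the column blocks of each output row.
    return [[v for out in outputs for v in out[i]] for i in range(len(x))]


if __name__ == "__main__":
    x = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    w = [[1.0, 0.0, 2.0], [0.0, 1.0, 3.0]]
    print(data_parallel(x, w, 2) == matmul(x, w), model_parallel(x, w, 3) == matmul(x, w))
```

Data parallelism keeps a full copy of the weights on every worker, while model parallelism keeps a full copy of the activations. Which one pays off depends on whether the model or the batch is the larger memory cost.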

From www.researchgate.net

Architecture of distributed deep learning-based partial offloading

From www.anyscale.com

What Is Distributed Training?

From www.vrogue.co

Demystifying Parallel and Distributed Deep Learning (vrogue.co)

From siboehm.com

Data-Parallel Distributed Training of Deep Learning Models
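Synchronous data-parallel training works as follows. Every worker holds a full model replica and computes a gradient on its own shard of the batch. The gradients are then averaged (an all-reduce), and every replica applies the same update. With equally sized shards and a mean loss, the averaged gradient equals the full-batch gradient. The following is a minimal sketch of that step for a hypothetical one-parameter linear regression, simulated in one process. The model, data and function names are illustrative assumptions, not taken from the article.

```python
from typing import List


def grad(w: float, xs: List[float], ys: List[float]) -> float:
    """Gradient of the mean squared error of the model y = w * x."""
    return sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)


def data_parallel_step(w: float, xs: List[float], ys: List[float],
                       n_workers: int, lr: float) -> float:
    """One synchronous data-parallel SGD step with equally sized shards."""
    if len(xs) % n_workers != 0:
        raise ValueError("use equal shards so the average matches the full batch")
    size = len(xs) // n_workers
    # Each worker computes a gradient on its own shard of the batch.
    local = [grad(w, xs[r * size:(r + 1) * size], ys[r * size:(r + 1) * size])
             for r in range(n_workers)]
    # Averaging stands in for an all-reduce (sum) followed by division by n.
    g = sum(local) / n_workers
    # Every replica applies the same update, so the weights stay identical.
    return w - lr * g


if __name__ == "__main__":
    xs = [float(i) for i in range(1, 9)]
    ys = [3.0 * x for x in xs]  # the true weight is 3
    w = 0.0
    for _ in range(50):
        w = data_parallel_step(w, xs, ys, n_workers=4, lr=0.01)
    print(w)
```

If the shards were unequal, a plain average of shard gradients would overweight the smaller shards. This is why the sketch insists on equal shards.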

From www.telesens.co

Understanding Data Parallelism in Machine Learning (Telesens)

From www.linkedin.com

Demystifying Parallel and Distributed Deep Learning

From www.researchgate.net

OSDP: Optimal Sharded Data Parallel for Distributed Deep Learning (PDF)
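The sharded data parallelism named in this title can be sketched generically. Instead of every worker updating a full copy of the parameters, each worker owns one shard. It receives only the averaged gradient for that shard (reduce-scatter), updates it, and then the shards are gathered back together (all-gather). The following is a minimal single-process sketch in the spirit of ZeRO/FSDP-style sharding. It does not reproduce OSDP's specific method, and the function name is hypothetical.

```python
from typing import List


def sharded_sgd_step(params: List[float], local_grads: List[List[float]],
                     lr: float) -> List[float]:
    """One data-parallel SGD step with parameters sharded over len(local_grads) workers.

    local_grads[r] is worker r's full-size gradient; len(params) must be divisible
    by the number of workers.
    """
    n = len(local_grads)
    if len(params) % n != 0:
        raise ValueError("parameter count must be divisible by the number of workers")
    c = len(params) // n
    updated_shards = []
    for r in range(n):
        lo, hi = r * c, (r + 1) * c
        # Reduce-scatter: worker r only receives the averaged gradient for its shard.
        g_shard = [sum(g[i] for g in local_grads) / n for i in range(lo, hi)]
        # Worker r updates (and would keep optimizer state for) only its own shard.
        updated_shards.append([p - lr * g for p, g in zip(params[lo:hi], g_shard)])
    # All-gather: concatenate the shards so every worker sees the full parameters.
    return [p for shard in updated_shards for p in shard]


if __name__ == "__main__":
    print(sharded_sgd_step([1.0, 2.0, 3.0, 4.0], [[1.0] * 4, [3.0] * 4], lr=0.5))
```

The result matches an unsharded data-parallel step. The saving is that each worker keeps optimizer state for only 1/n of the parameters.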

From www.slidestalk.com

Parallel and Distributed Systems in Machine Learning

From www.brain-ai.jp

Parallel deep reinforcement learning: Correspondence and Fusion of …

From www.scribd.com

Distributed Deep Learning for Parallel Training (PDF)

From deepai.org

OSDP: Optimal Sharded Data Parallel for Distributed Deep Learning (DeepAI)

From deepai.org

Systems for Parallel and Distributed Large-Model Deep Learning Training

From frankdenneman.nl

Multi-GPU and Distributed Deep Learning (frankdenneman.nl)

From analyticsindiamag.com

A Guide to Parallel and Distributed Deep Learning for Beginners